Key points

- R provides multiple ways to import data files, making it a versatile tool for data analysis.

- Efficient CSV Import Methods: Different functions like read.csv, read_csv, and fread cater to different dataset sizes and performance needs.

- Excel File Handling: The readxl package simplifies importing data from Excel files, supporting both .xls and .xlsx formats.

- Database Connectivity: Using DBI and RMySQL packages, R can connect to SQL databases, facilitating direct data import for analysis.

- Web API Integration: The httr package allows R to fetch and import data from web APIs, enabling real-time data integration into analyses.

Have you ever wondered how the ability to import data from multiple sources seamlessly can transform your data analysis projects? Imagine the possibilities of effortlessly integrating data from Excel files, SQL databases, and web APIs into your R environment. This blog will answer your questions and empower you with practical techniques to elevate your data import skills. Ready to dive in and unlock the full potential of R programming? Let’s get started!

Importance of Data Import in R

Importing data into R is a fundamental step in any data analysis project. You cannot leverage its powerful statistical and graphical capabilities without the ability to bring data into R. Efficient data import ensures that your analysis is based on accurate and complete data, which is crucial for making informed decisions. Whether working with small datasets or large, complex data structures, mastering data import techniques in R will significantly enhance your productivity and the quality of your analyses.

Types of Data Formats That Can Be Imported into R

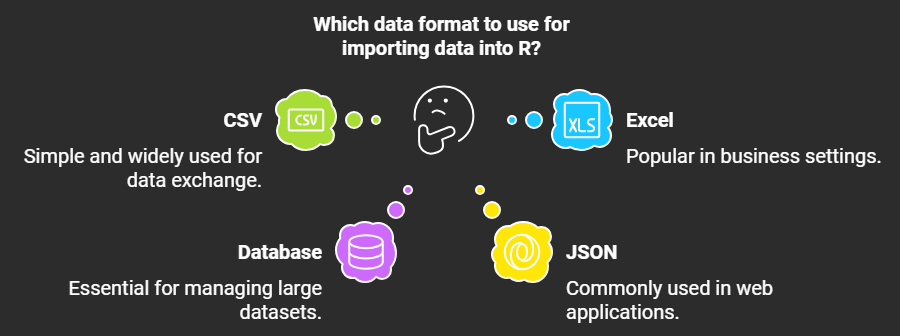

R supports a wide range of data formats and sources, making it a versatile tool for data analysis. Common ones include CSV files, Excel spreadsheets, databases, JSON files, and web APIs. Each has its advantages and use cases. For instance, CSV files are simple and widely used for data exchange, while Excel files are popular in business settings. Databases are essential for managing large datasets, JSON files are commonly used in web applications, and web APIs allow for real-time data integration. Understanding how to import from each of these into R is essential for any data analyst.

| Data Type | Library | Function | When to Use | When Not to Use | Comparison with Other Methods |
| --- | --- | --- | --- | --- | --- |
| CSV | Base R | read.csv | Small to medium datasets, simple usage | Large datasets, performance-critical tasks | Slower than read_csv and fread, but straightforward for basic tasks |
| CSV | readr | read_csv | Medium to large datasets, need for speed | Very large datasets, extremely large files | Faster than read.csv, more modern and flexible |
| CSV | data.table | fread | Very large datasets, performance-critical tasks | Small datasets, simple usage | Fastest method, highly optimized for large data |
| Excel | readxl | read_excel | Importing .xls and .xlsx files, multiple sheets | Very large Excel files, complex data manipulations | Simple and efficient, no external dependencies |
| Database | DBI + RMySQL | dbConnect, dbGetQuery | Direct database access, SQL queries | Non-SQL databases, complex database operations | Provides direct access to SQL databases, integrates well with R |
| JSON | jsonlite | fromJSON | Hierarchical data, web data | Very large JSON files, complex nested structures | Easy to use, converts JSON to R data frames efficiently |
| Web API | httr | GET, content | Real-time data, online sources | Offline data, non-HTTP APIs | Simplifies HTTP requests, integrates web data into R |

The blog aims to equip you with the knowledge and skills to import various data formats into R. We will cover detailed methods for importing CSV files, Excel files, databases, JSON files, and web APIs. Each section will provide practical examples and code snippets to help you understand and apply these techniques in your projects. By the end of this blog, you will be confident in your ability to import data into R, regardless of the format or source. This will enable you to focus on analyzing and interpreting your data rather than struggling with data import issues.


Importing CSV Files

Explanation of CSV Files and Their Common Usage

CSV (Comma-Separated Values) files are a popular format for storing tabular data. They are widely used because they are simple to create and can be opened with various software, including spreadsheet programs like Microsoft Excel and Google Sheets. In R programming, CSV files are commonly used to import data for analysis. Each line in a CSV file represents a row in the table, and a comma separates each value. This format is handy for data exchange between different systems.
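To make the row-and-comma structure concrete, here is a minimal sketch in base R. The three sample rows and the variable names `csv_text` and `cars_df` are made up for illustration; the only function used is `read.csv`, which also accepts raw text through its `text` argument.

```r
# A tiny CSV document held in a string: the first line is the header,
# every following line is one row, and commas separate the values.
csv_text <- "model,mpg,cyl
Mazda RX4,21.0,6
Datsun 710,22.8,4
Hornet 4 Drive,21.4,6"

# read.csv() can parse text directly, so no file on disk is needed here.
cars_df <- read.csv(text = csv_text, stringsAsFactors = FALSE)

str(cars_df)   # shows the column names and the types R inferred
cars_df$mpg    # the mpg column, parsed as numbers rather than text
```

Reading from a string like this is also a handy way to test parsing options before pointing the function at a real file.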

Method 1: Using read.csv from Base R

The read.csv function from Base R is a straightforward way to import CSV files. It is suitable for smaller datasets and is easy to use. The function reads the CSV file and converts it into a data frame. Here’s how you can use it:

data1 <- read.csv("path/to/your/file.csv", header=TRUE, stringsAsFactors=FALSE)

head(data1)

Method 2: Using read_csv from the readr Package

The read_csv function from the readr package is faster than read.csv and is better suited for larger datasets. It provides more options for handling different data types and missing values. Here’s how to use it:

library(readr)

data2 <- read_csv("path/to/your/file.csv")

head(data2)

Method 3: Using fread from the data.table Package


The fread function from the data.table package is typically the fastest of the three methods for importing large CSV files. It is highly optimized and can handle very large datasets efficiently. Here’s an example:

library(data.table)

data3 <- fread("path/to/your/file.csv")

head(data3)

By understanding these methods, you can choose the most appropriate one based on your dataset size and requirements. Each method has advantages, making R programming versatile for data import tasks.
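If you do not have a CSV file at hand, the three methods can be tried side by side on a file you generate yourself. The sketch below is one way to do that, not a benchmark: it writes the built-in mtcars dataset to a temporary file (the name `csv_path` is an illustrative choice) and only calls readr and data.table if those packages happen to be installed.

```r
# Write the built-in mtcars dataset to a temporary CSV file so the
# example does not depend on any file of your own.
csv_path <- tempfile(fileext = ".csv")
write.csv(mtcars, csv_path, row.names = FALSE)

# Method 1: base R, always available.
data1 <- read.csv(csv_path, stringsAsFactors = FALSE)

# Method 2: readr, run only if the package is installed.
if (requireNamespace("readr", quietly = TRUE)) {
  data2 <- readr::read_csv(csv_path)
}

# Method 3: data.table, run only if the package is installed.
if (requireNamespace("data.table", quietly = TRUE)) {
  data3 <- data.table::fread(csv_path)
}

dim(data1)   # 32 rows and 11 columns, the shape of mtcars
```

On a file this small all three return almost instantly; the speed differences described above only become noticeable with much larger files.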

Importing Excel Files

Explanation of Excel Files and Their Common Usage

Excel files, commonly saved with extensions like .xls or .xlsx, are widely used for storing and sharing tabular data. They are popular in various fields, including business, education, and research, due to their ease of use and ability to handle large datasets with multiple sheets. In R programming, importing data from Excel files is a common task, especially when dealing with data collected from different sources. Excel files can contain multiple sheets, each with its own data set, making them versatile for organizing complex datasets.
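To illustrate the multi-sheet point, here is a small sketch that assumes the readxl package (covered in the next subsection) is already installed. It uses the example workbook that ships with readxl, so no file of your own is required; the variable names `xlsx_path`, `sheets`, and `all_sheets` are illustrative.

```r
library(readxl)

# readxl ships with a small example workbook; readxl_example() returns its path.
xlsx_path <- readxl_example("datasets.xlsx")

# List the names of the sheets in the workbook.
sheets <- excel_sheets(xlsx_path)
print(sheets)

# Read every sheet into a named list, one data set per sheet.
all_sheets <- lapply(sheets, function(s) read_excel(xlsx_path, sheet = s))
names(all_sheets) <- sheets

# Pick out a single sheet by name.
head(all_sheets[["mtcars"]])
```

Listing the sheets first avoids guessing at names such as "Sheet1", which may not exist in a workbook someone else created.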

Using the readxl Package

The readxl package in R is a powerful tool for importing Excel files. It supports both .xls and .xlsx formats and does not require external dependencies, making it easy to install and use across different operating systems. The read_excel function from this package is used to read data from Excel files into R. Here’s how you can use it:

# Install and load the readxl package

install.packages("readxl")

library(readxl)

# Import data from an Excel file

data <- read_excel("path/to/your/file.xlsx", sheet = "Sheet1")

head(data)

Importing Data from Databases

Explanation of Database Connections and Their Importance

Database connections are crucial for accessing and managing data stored in relational databases. They enable applications to communicate with database servers, allowing for the execution of SQL queries, data retrieval, and manipulation. A reliable database connection ensures data integrity, security, and performance. In R programming, connecting to databases allows users to import large datasets directly into R for analysis, making it a powerful tool for data scientists and analysts. Proper database connections also facilitate efficient data management and integration across different systems, enhancing decision-making and operational efficiency.
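The connect, query, disconnect cycle can be tried without a database server. The sketch below swaps in an in-memory SQLite database through the RSQLite package, which is an assumption made purely so the example runs without credentials; it is not the MySQL setup discussed in this post. The DBI calls themselves (`dbConnect`, `dbWriteTable`, `dbGetQuery`, `dbDisconnect`) are the same regardless of the backend.

```r
library(DBI)

# Assumption: RSQLite is installed. An in-memory SQLite database stands in
# for a real database server so the example needs no credentials.
con <- dbConnect(RSQLite::SQLite(), ":memory:")

# Copy a built-in data frame into the database as a table.
dbWriteTable(con, "mtcars", mtcars)

# Run a SQL query; dbGetQuery() returns the result as a data frame.
# The `?` placeholder keeps the value out of the SQL string itself.
four_cyl <- dbGetQuery(
  con,
  "SELECT mpg, cyl, hp FROM mtcars WHERE cyl = ?",
  params = list(4)
)

head(four_cyl)

# Release the connection when finished.
dbDisconnect(con)
```

Filtering in SQL, as in the WHERE clause here, means only the rows you need are transferred into R, which matters once tables grow large.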

Using DBI and RMySQL Packages

The DBI and RMySQL packages in R provide a robust framework for connecting to MySQL databases. The DBI package offers a consistent interface for database operations, while RMySQL provides the necessary drivers to connect to MySQL databases. Here’s how you can use these packages to import data from a MySQL database:

# Install and load the necessary packages

install.packages("DBI")

install.packages("RMySQL")

library(DBI)

library(RMySQL)

# Establish a connection to the MySQL database

con <- dbConnect(RMySQL::MySQL(),
                 dbname = "your_db",
                 host = "your_host",
                 user = "your_user",
                 password = "your_password")

# Import data from a specific table

data <- dbGetQuery(con, "SELECT * FROM your_table")

head(data)

# Disconnect from the database

dbDisconnect(con)

Importing JSON Files

Explanation of JSON Files and Their Common Usage

JSON (JavaScript Object Notation) files are a lightweight data-interchange format that is easy for humans to read and write and for machines to parse and generate. JSON is commonly used for transmitting data in web applications, APIs, and configuration files. In R programming, importing JSON files is essential for working with data from web services and APIs. JSON’s hierarchical structure allows for representing complex data relationships, making it a versatile format for data exchange.
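As a small illustration of that hierarchical structure, the sketch below parses a JSON string in which each record contains a nested object. It assumes the jsonlite package (introduced in the next subsection) is installed; the sample records and the names `json_text` and `cars_df` are made up for the example.

```r
library(jsonlite)

# Two records, each with a nested "specs" object.
json_text <- '[
  {"model": "Mazda RX4",  "specs": {"mpg": 21.0, "cyl": 6}},
  {"model": "Datsun 710", "specs": {"mpg": 22.8, "cyl": 4}}
]'

# fromJSON() accepts a JSON string as well as a file path or URL.
# flatten = TRUE turns the nested "specs" object into ordinary columns.
cars_df <- fromJSON(json_text, flatten = TRUE)

names(cars_df)      # "model" "specs.mpg" "specs.cyl"
cars_df$specs.mpg   # the nested mpg values as a plain numeric column
```

Without `flatten = TRUE`, the `specs` column would itself be a data frame nested inside the outer one, which is valid but less convenient for most analyses.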

Using the jsonlite Package

The jsonlite package in R provides functions to parse JSON data and convert it into R data frames. The fromJSON function is used to read JSON files and convert them into R objects. Here’s how you can use it:

# Install and load the jsonlite package

install.packages("jsonlite")

library(jsonlite)

# Import data from a JSON file

data <- fromJSON("path/to/your/file.json")

head(data)

Importing Data from Web APIs

Explanation of Web APIs and Their Importance

Web APIs (Application Programming Interfaces) are essential tools in modern web development. They allow different software applications to communicate with each other, enabling the exchange of data and functionality. APIs are crucial for integrating various services, such as retrieving data from web servers, accessing third-party services, and automating workflows. In R, you can call APIs to fetch data from online sources, making it possible to incorporate real-time data into your analyses. This capability is especially valuable for applications that require up-to-date information, such as financial analysis, weather forecasting, and social media monitoring.
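A typical request and response cycle can be sketched as a small helper function. This is an illustration under stated assumptions: the httr and jsonlite packages are installed, the API returns JSON, and `fetch_json` is a hypothetical name rather than a function from either package. The URL in the usage comment is a placeholder, so the call is left commented out.

```r
library(httr)
library(jsonlite)

# Hypothetical helper: request a URL and return the parsed JSON body.
fetch_json <- function(url, ...) {
  # Send the request and give up if the server takes longer than 10 seconds.
  response <- GET(url, ..., timeout(10))

  # Turn HTTP errors (4xx/5xx) into R errors instead of parsing an error page.
  stop_for_status(response)

  # Read the body as text, then parse it as JSON.
  body_text <- content(response, as = "text", encoding = "UTF-8")
  fromJSON(body_text, flatten = TRUE)
}

# Usage (placeholder URL; replace it with a real endpoint):
# api_data <- fetch_json("https://api.example.com/data")
# str(api_data)
```

Checking the status before parsing is the main design choice here: a failed request then stops with a clear error rather than handing an error page to the rest of your analysis.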

Using the httr Package

The httr package in R provides a user-friendly interface for working with web APIs. It simplifies the process of sending HTTP requests and handling responses. Here’s how you can use the httr package to fetch data from a web API:

# Install and load the httr package

install.packages("httr")

library(httr)

# Send a GET request to the API

response <- GET("https://api.example.com/data")

# Parse the response content

data <- content(response, "parsed")

head(data)

Conclusion

In this blog, we explored various methods to import data into R. We started with importing CSV files using read.csv, read_csv, and fread, each offering different advantages based on dataset size and complexity. Next, we discussed importing Excel files using the readxl package, which is efficient and straightforward for handling .xls and .xlsx formats. We then covered importing data from databases using the DBI and RMySQL packages, which facilitate data retrieval from MySQL databases. Additionally, we looked at importing JSON files using the jsonlite package, ideal for working with hierarchical data structures. Finally, we explored importing data from web APIs using the httr package, enabling real-time data integration from online sources.

I encourage you to practice these methods using the provided examples to become proficient in data import techniques in R. Experimenting with different data formats and sources will enhance your data analysis skills and make your workflow more efficient.

If you have any questions or comments, feel free to leave them below. Your feedback is valuable, and I’m here to help you with any further queries. Happy coding!

Transform your raw data into actionable insights. Let my expertise in R and advanced data analysis techniques unlock the power of your information. Get a personalized consultation and see how I can streamline your projects, saving you time and driving better decision-making. Contact me today at contact@rstudiodatalab.com to schedule your discovery call.
