Your statistical model is built, and your p-values are perfect, but is your conclusion valid? What if a single, overlooked duplicate entry in your dataset is silently skewing your results, leading to flawed insights? How can you be certain that the data you're analyzing is clean, accurate, and trustworthy?

The key to data integrity lies in identifying and managing redundancies. R provides a powerful, built-in tool for this: the duplicated() function. It scans a vector or data frame and determines which elements are duplicates of entries that appeared earlier. It returns a logical vector (TRUE/FALSE) with one value per element of a vector or per row of a data frame, where TRUE marks a duplicate. Learning how to use the duplicated function isn't just a programming trick; it's a fundamental step in pre-processing that supports the validity of your entire data analysis. It's your first line of defense against the kind of data errors that can skew results and compromise your research.

Key Points

- Find Duplicates Instantly: The duplicated() function is your go-to tool in R to find copied data. It scans your data and tags every repeated entry as TRUE, making it simple to spot duplicates.

- Remove Duplicates with One Simple Trick: To get a clean dataset, just add a ! before the function and use the result to select rows. This single line of code keeps only the first occurrence of each row and is often the quickest way to clean your data.

# This is the most common way to get a clean data frame

cleaned_df <- df[!duplicated(df), ]

- Define Your Own Duplicates: You don't have to check the entire row. You can instruct R to search for duplicates based only on specific columns, such as CustomerID or Email, giving you complete control over your data cleaning.

- Write Cleaner Code with dplyr: If you like tidy code, use dplyr::distinct(). It returns the same rows as df[!duplicated(df), ], but is often easier to read and integrates seamlessly into modern data analysis workflows.

- Always Look Before You Leap: Never delete rows without checking them first. A quick visualization or summary can prevent you from accidentally removing essential data. Clean data is excellent, but valid data is even better.
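The "Define Your Own Duplicates" point above can be sketched with a small toy example. The data frame `orders` and its columns (`CustomerID`, `Email`, `Amount`) are hypothetical and exist only for illustration; swap in your own key columns.

```r
# Hypothetical toy data: customer 102 appears twice with the same email
orders <- data.frame(
  CustomerID = c(101, 102, 102, 103),
  Email      = c("a@example.com", "b@example.com", "b@example.com", "c@example.com"),
  Amount     = c(50, 20, 35, 80),
  stringsAsFactors = FALSE
)

# No two rows match on every column, so a whole-row check flags nothing
full_row_dups <- duplicated(orders)

# Restricting the check to the key columns flags the repeated customer
key_dups <- duplicated(orders[, c("CustomerID", "Email")])

# Keep the first row for each CustomerID/Email pair
deduped <- orders[!key_dups, ]
print(deduped)
```

Whether the second row for customer 102 is an error or a legitimate second purchase is a judgment call, which is why the choice of key columns matters.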

Why a Data Analyst Must Learn Duplicate Detection

Imagine you're analyzing customer data for a marketing campaign. You see two entries for the same CustomerID. Is this a loyal customer who made two purchases, or is it a data entry error? Failing to address such issues can completely throw off your analysis.

A simple duplicate row can inflate your customer count, skew sales totals, and lead to poor business decisions. This is a common problem in data analysis, but thankfully, R has a simple, built-in solution. The duplicated() function in R is a powerful tool within base R designed to find and help you manage these unwanted copies. This guide will walk you through everything you need to know, from the basic syntax to advanced, real-world examples, so you can ensure the integrity of your data.

The duplicated() Function: Syntax and Core Arguments

Before we start, let's understand how the function is structured. At its heart, the function is simple and designed for one core purpose: to determine which elements in your data are duplicates. Its syntax is duplicated(x, incomparables = FALSE, fromLast = FALSE, ...). While it looks technical, each part has a specific job that gives you control over how R identifies a duplicate. Understanding these arguments is the first step to leveraging its full power for clean and reliable data.

| Argument | What It Does |
|---|---|
| x | The data you want to check. This can be a simple vector or a whole data frame. |
| fromLast | A logical switch (TRUE/FALSE). It tells R whether to start checking for duplicates from the start or the end of your data. |
| incomparables | A vector of values that you want the function to ignore. This argument is typically reserved for exceptional cases and is not commonly employed. |
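To make the return value concrete, here is a minimal sketch on a made-up vector (the `ages` values are hypothetical, not taken from the article's dataset). It shows that the result is a logical vector with one entry per input element.

```r
# Hypothetical vector of ages
ages <- c(34, 58, 41, 58, 34, 34)

# TRUE marks an element that already appeared earlier in the vector
flags <- duplicated(ages)
print(flags)
# FALSE FALSE FALSE TRUE TRUE TRUE

# The result has exactly one entry per input element
same_length <- length(flags) == length(ages)
print(same_length)
```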

The x Argument

The x argument is simply the data you want to investigate. It is incredibly flexible and can be almost any data object in R. For instance, you could check for duplicate ages in your customer data by pointing x to a single column, which is a vector. Or, you could check for completely identical customer records by pointing x to your entire data frame. This versatility is what makes the function so useful for a wide range of data cleaning tasks, whether you're working with a simple list of numbers or a complex, multi-column dataset.

# Example: Using a single column (vector) as the 'x' argument

customer_ages <- df$Age

duplicated(customer_ages)

# Example: Using the entire data frame as the 'x' argument

duplicated(df)

The fromLast Argument

By default, duplicated() marks the second, third, and subsequent occurrences of a value as TRUE, so the first occurrence is the one treated as the original. But what if you want to treat the last occurrence as the original instead? This is where the fromLast argument comes in. It's a logical value indicating whether duplication should be considered from the reverse side. When you set fromLast = TRUE, the function starts from the end of your data and works backward, flagging all but the last occurrence of a duplicated element. This is helpful for analyses where the last known record is considered the most current or relevant one.

Let's see how it works on the Age column in our dataset, which we know has duplicate values.

# Default: fromLast = FALSE (marks the second '49' as the duplicate)

df$IsDuplicate_Default <- duplicated(df$Age)

# Using fromLast = TRUE (marks the first '49' as the duplicate)

df$IsDuplicate_FromLast <- duplicated(df$Age, fromLast = TRUE)

head(df)

The output shows how the TRUE value shifts depending on the fromLast setting, giving you precise control over which duplicate you target.
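Since the article's dataset is not reproduced here, the same behaviour can be checked on a tiny made-up vector. The `sensor` vector below is hypothetical; the sketch compares the default with fromLast = TRUE and then keeps the last occurrence of each value.

```r
# Hypothetical log of sensor names; later entries are more recent
sensor <- c("A", "B", "A", "C", "B")

# Default: later repeats are flagged
default_flags <- duplicated(sensor)
# FALSE FALSE TRUE FALSE TRUE

# fromLast = TRUE: earlier repeats are flagged instead
from_last_flags <- duplicated(sensor, fromLast = TRUE)
# TRUE TRUE FALSE FALSE FALSE

# Keep only the most recent occurrence of each value
last_seen <- sensor[!from_last_flags]
print(last_seen)
# "A" "C" "B"
```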

The incomparables Argument

The incomparables argument is a more specialized tool. It is meant for situations where you want the duplicated() function to ignore specific values when checking for duplicates. For example, if your dataset uses a placeholder like "Not Available" or NA for missing information, you might not want to treat all "Not Available" entries as duplicates of each other. By passing that value to the incomparables argument, you tell R that these specific values cannot be compared, so they are never marked as duplicates. While you won't use it in most day-to-day data cleaning tasks, knowing it exists can be a lifesaver in unique scenarios where you need to exclude specific data points from the duplication check. The example below passes "Male" purely to show the mechanics: every "Male" entry in the Gender column comes back FALSE.

incomparables_check <- duplicated(df$Gender, incomparables = "Male")

incomparables_check
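The placeholder scenario described above might look like the following sketch. The `status` vector is hypothetical. Note that this uses a plain vector; as far as I know, the data frame method of duplicated() does not accept incomparables values other than FALSE, so treat that as something to verify for your R version.

```r
# Hypothetical column with a text placeholder and real NAs
status <- c("Gold", "Not Available", "Gold", "Not Available", NA, NA)

# Default: repeated placeholders and repeated NAs count as duplicates
default_flags <- duplicated(status)
# FALSE FALSE TRUE TRUE FALSE TRUE

# Placeholders and NAs are declared incomparable, so they are never flagged
ignoring_flags <- duplicated(status, incomparables = c("Not Available", NA))
# FALSE FALSE TRUE FALSE FALSE FALSE

print(ignoring_flags)
```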

Practical Application: Working with Vectors

Before tackling entire datasets, it’s best to start with the basics. A vector is the simplest data structure in R, essentially a single list of values, like one column from a spreadsheet. The duplicated function works perfectly on vectors to help you find repeated entries. Whether you're working with numbers or text, these copy-paste-ready base R examples will help you identify duplicate elements in any list of data. This is a foundational skill for cleaning data at the most granular level.

Identifying Duplicates in a Numeric Vector

Let's start with numbers. Imagine you want to see if any customers in your dataset share the same age. We can pull the Age column into a numeric vector and run the duplicated() function on it. The function will scan the list from top to bottom and return TRUE for every age it has already seen. This simple check is a quick way to understand the distribution and repetition within your numerical data.

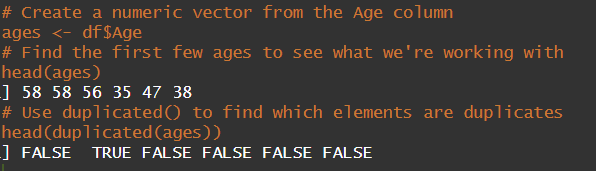

# Create a numeric vector from the Age column

ages <- df$Age

# Find the first few ages to see what we're working with

head(ages)

# Use duplicated() to find which elements are duplicates

head(duplicated(ages))

In the output, the second 58 is marked as TRUE because it's a duplicate of the first one. All other ages are marked FALSE because this is the first time they appear in the list.
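Beyond the raw TRUE/FALSE flags, a few one-liners summarise the repetition. This is a sketch on a hypothetical vector, reusing the name `ages` for convenience; the values are made up.

```r
# Hypothetical ages, with 58 appearing three times and 41 twice
ages <- c(58, 41, 58, 33, 41, 58)

# How many elements are repeats of an earlier value?
n_repeats <- sum(duplicated(ages))                 # 3

# Which values occur more than once?
repeated_values <- unique(ages[duplicated(ages)])  # 58 41

# At which positions do the repeats sit?
repeat_positions <- which(duplicated(ages))        # 3 5 6

print(repeated_values)
```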

Finding Duplicates in a Character Vector

The function works just as well on text data. Let's check for repeated entries in the PolicyType column. This is useful for understanding which categories might be over-represented or if there are data entry errors. The logic is identical: the function reads through the list of policy types and flags any that have appeared before. This helps you quickly spot and analyze repetitions in categorical data.

# Create a character vector from the PolicyType column

policy_types <- df$PolicyType

# Check the first few policy types

head(policy_types)

# Use duplicated() to find which elements are duplicates

head(duplicated(policy_types))

How to Extract Unique Elements

Identifying duplicates is useful, but often, your goal is to create a clean list containing only the unique values. This is where the ! (NOT) operator becomes your best friend. By adding ! before the duplicated() function, you flip the TRUEs and FALSEs. This means you can easily filter your vector to keep only the first occurrence of each element, giving you a list of unique values. It's the most common and practical way to use the function's output.
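As a quick sanity check on a made-up vector, the sketch below shows the flipped mask and confirms that it gives the same values as unique(). The `policy` and `premium` vectors are hypothetical. One reason to prefer the mask over unique() is that the same mask can filter a second, parallel vector.

```r
# Hypothetical policy types and a parallel vector of premiums
policy  <- c("Auto", "Home", "Auto", "Life", "Home")
premium <- c(500, 300, 520, 700, 310)

# TRUE now marks the first occurrence of each value
keep <- !duplicated(policy)
# TRUE TRUE FALSE TRUE FALSE

first_seen <- policy[keep]        # "Auto" "Home" "Life"
same_as_unique <- identical(first_seen, unique(policy))

# The mask also selects the matching entries of the parallel vector
first_premium <- premium[keep]    # 500 300 700
print(first_seen)
```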

# Create a character vector of policy types

policy_types <- df$PolicyType

# Use the '!' operator to select only the non-duplicate elements

unique_policies <- policy_types[!duplicated(policy_types)]

print(unique_policies)

The Most Common Use Case: Finding Duplicate Rows in a Data Frame

While checking a single vector is helpful, the real power of duplicated() shines when working with a data frame. In most data analysis, a duplicate isn't just a repeated value but an entire row that has been copied. These duplicate rows can significantly harm your analysis, and identifying them is a crucial step in data cleaning. The function makes it easy to scan your entire dataset for these identical entries.

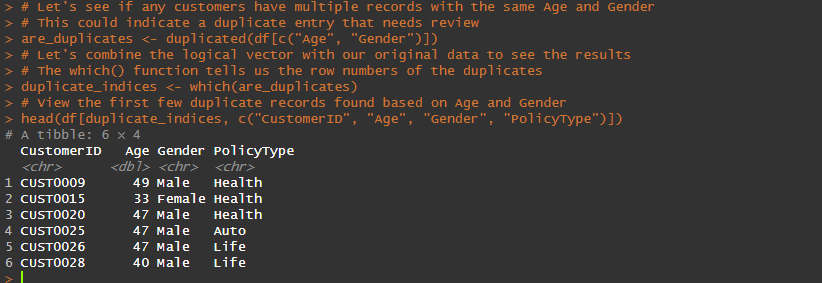

# Let's see if any customers have multiple records with the same Age and Gender

# This could indicate a duplicate entry that needs review

are_duplicates <- duplicated(df[c("Age", "Gender")])

# Let's combine the logical vector with our original data to see the results

# The which() function tells us the row numbers of the duplicates

duplicate_indices <- which(are_duplicates)

# View the first few duplicate records found based on Age and Gender

head(df[duplicate_indices, c("CustomerID", "Age", "Gender", "PolicyType")])
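For a self-contained view of the difference between whole-row and column-based checks, here is a sketch on a hypothetical `customers` data frame. It also shows one way to pull every row of a repeated group, first occurrence included, so the records can be reviewed side by side.

```r
# Hypothetical data: row 3 is an exact copy of row 2,
# while rows 1 and 4 only share Age and Gender
customers <- data.frame(
  CustomerID = c(1, 2, 2, 3, 4),
  Age        = c(30, 45, 45, 30, 52),
  Gender     = c("F", "M", "M", "F", "M"),
  stringsAsFactors = FALSE
)

# Whole-row check: only the exact copy is flagged
whole_row <- duplicated(customers)
# FALSE FALSE TRUE FALSE FALSE

# Column-based check: any repeated Age/Gender combination is flagged
key <- customers[c("Age", "Gender")]
by_cols <- duplicated(key)
# FALSE FALSE TRUE TRUE FALSE

# Combine both scan directions to get every member of a repeated group
in_group <- by_cols | duplicated(key, fromLast = TRUE)
review <- customers[in_group, ]
print(review)
```

In this toy data, customers 1 and 3 land in the review set even though they are different people, which is the kind of false positive the inspection step is meant to catch.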

Advanced Usage and Tidyverse Alternatives

Once you have mastered the basics of finding and removing duplicates, it's time to explore more efficient and modern techniques. While the duplicated() function is a reliable tool in base R, knowing its alternatives can make your code faster, more readable, and better suited for specific tasks. Going beyond the basics showcases a deeper understanding of R's ecosystem and is essential for anyone working with complex or large datasets. These expert-level methods will help you write more professional and effective data-cleaning scripts.

Sometimes, you don't need a complete list of every duplicate. You need a quick yes-or-no answer: does this dataset contain any duplicates at all? For this, R provides a highly efficient function called anyDuplicated(). Instead of returning a long logical vector for every single row, it scans the data and stops as soon as it finds the first duplicate. If no duplicates are found, it returns 0. If it finds a duplicate, it returns the index of the first duplicate it encounters. This is much faster and uses less memory, making it the ideal tool for a quick preliminary check on the integrity of your data.
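The two possible outcomes are easy to see on small made-up vectors. The names `ids_clean` and `ids_dirty` are hypothetical; the last lines sketch how the result might serve as a guard at the top of a cleaning script.

```r
# Hypothetical ID vectors
ids_clean <- c(10, 11, 12)
ids_dirty <- c(10, 11, 11, 12, 10)

clean_result <- anyDuplicated(ids_clean)  # 0: no duplicates
dirty_result <- anyDuplicated(ids_dirty)  # 3: position of the first repeat

# Use the result as a simple yes/no guard
has_dups <- dirty_result > 0
if (has_dups) {
  message("Duplicate IDs found; first repeat at position ", dirty_result)
}
```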

# Check if there are any duplicate rows in the entire data frame

anyDuplicated(df) # This will likely return 0 if the original df is clean.

# Let's check a column we know has duplicates, like 'Age'

anyDuplicated(df$Age)

For those who prefer the readable, pipe-based syntax of the Tidyverse, the dplyr package offers a fantastic alternative: distinct(). This function is specifically designed to handle duplicate rows in a data frame and is often considered more intuitive. Its primary job is to keep only the unique or distinct rows. You can apply it to the entire data frame or specify certain columns to consider when identifying duplicates. This makes the code for removing duplicate entries clean, easy to read, and simple to integrate into a larger dplyr workflow.

# First, ensure you have dplyr installed and loaded

# install.packages("dplyr")

library(dplyr)

# Remove duplicate rows based on CustomerID and PolicyType

# The .keep_all = TRUE argument ensures we keep all other columns in the data frame

cleaned_df_dplyr <- df %>%

distinct(CustomerID, PolicyType, .keep_all = TRUE)

# View the dimensions of the original and cleaned data frames to see the effect

print(paste("Original rows:", nrow(df)))

print(paste("Rows after distinct:", nrow(cleaned_df_dplyr)))

Performance on a Large Set of Data

When you're working with small or medium-sized datasets, the performance differences between methods are negligible. However, when your data frame grows to millions or even tens of millions of rows, speed becomes a critical factor. While base R's duplicated() function is optimized and relatively fast, it can become slow with very large datasets. In these high-performance scenarios, the data.table package is a popular choice. Its specialized methods for finding and removing duplicates are designed for speed and memory efficiency, and they often outperform both base R and dplyr by a significant margin. For truly large data tasks in R, data.table is an essential tool.
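For readers who have not used data.table before, the sketch below shows the basic shape of its duplicate handling. It assumes the data.table package is installed, and the table `dt` is a tiny hypothetical example, so it demonstrates the syntax only and says nothing about speed. The `by` argument names the columns that define a duplicate.

```r
# Assumes data.table is installed: install.packages("data.table")
library(data.table)

# Hypothetical policy records; customer 2 has two "Home" rows
dt <- data.table(
  CustomerID = c(1, 2, 2, 3),
  PolicyType = c("Auto", "Home", "Home", "Life"),
  Premium    = c(500, 300, 320, 700)
)

# Flag rows that repeat an earlier CustomerID/PolicyType combination
dup_flags <- duplicated(dt, by = c("CustomerID", "PolicyType"))
# FALSE FALSE TRUE FALSE

# Keep the first row of each combination
dt_unique <- unique(dt, by = c("CustomerID", "PolicyType"))
print(dt_unique)
```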

Visualizing Duplicates Before Removal

A great way to "inspect your data" is to visualize it. Instead of just deleting duplicates, first, create a summary to see how many there are and where they occur. A simple bar chart can give you a clear picture of the problem. You could plot the number of duplicate entries found for each PolicyType. This provides a quick diagnostic view of your dataset, helping you understand the scale of the issue before taking action. This approach is less about complex coding and more about making informed, data-driven decisions.
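The per-PolicyType count mentioned above can be sketched in base R before any plotting. The `policies` data frame is hypothetical; the resulting table could be handed to a plotting function of your choice.

```r
# Hypothetical records: customer 2 appears twice, customer 3 three times
policies <- data.frame(
  CustomerID = c(1, 2, 2, 3, 3, 3),
  PolicyType = c("Auto", "Home", "Home", "Life", "Life", "Life"),
  stringsAsFactors = FALSE
)

# Flag repeated CustomerIDs
policies$Is_Duplicate <- duplicated(policies$CustomerID)

# Overall summary: how many rows are flagged?
overall <- table(policies$Is_Duplicate)

# Count the flagged rows within each policy type
dup_by_type <- table(policies$PolicyType[policies$Is_Duplicate])
print(dup_by_type)
```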

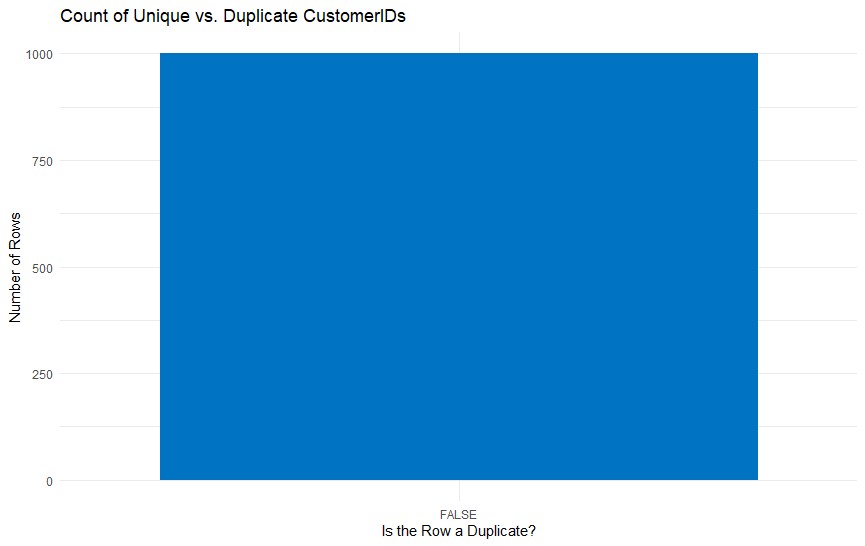

# First, ensure you have ggplot2 installed and loaded

library(ggplot2)

# Create a data frame that shows which rows are duplicates based on CustomerID

df$Is_Duplicate <- duplicated(df$CustomerID)

# Use table() for a quick text-based summary

print(table(df$Is_Duplicate))

# Create a bar chart to visualize the counts

ggplot(df, aes(x = Is_Duplicate)) +

geom_bar(fill = "#0073C2") +

labs(title = "Count of Unique vs. Duplicate CustomerIDs",

x = "Is the Row a Duplicate?",

y = "Number of Rows") +

theme_minimal()

Conclusion

Learning R's duplicated() function is more than just learning a piece of code; it's about taking full control of your data's quality. We've journeyed from understanding its core syntax and arguments to applying it confidently on simple vectors and complex data frames. You now have the power to move beyond basic checks and precisely remove duplicate rows based on the specific columns that matter to your research, a crucial skill for any real-world data analysis. By exploring faster alternatives like anyDuplicated() and the highly readable dplyr::distinct(), you are now equipped for any scenario, from a quick data health check to a large, complex project.

Ultimately, the most critical takeaway is to embrace the best practices we discussed. Visualizing potential duplicates and carefully inspecting your data before removing anything is what separates a good analyst from a great one. Don't wait—apply these techniques to your current project today. Here’s a final piece of advice: make duplicate detection a mandatory, non-negotiable step in your workflow. The most powerful and accurate insights always begin with data you can absolutely trust.

Frequently Asked Questions (FAQs)

What does the duplicated() function do in R?

The duplicated() function in R is a tool used to find repeated values in your data. When you give it a list (vector) or a whole table (data frame), it checks every single entry and returns a list of TRUE or FALSE values. An entry is marked as TRUE if it's an exact copy of one that appeared earlier in the list, and FALSE if it's the first time the value has appeared. It's a fundamental tool for data cleaning.

What is the replicate() function in R?

The replicate() function is used when you want to repeat an action or an entire expression multiple times. It's different from just copying a value. Think of it as running the same process over and over again, which is very useful in simulations or statistical experiments. For example, you could use it to roll a die 100 times and store all the results. It helps you see how results can vary when you repeat the same task.
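The die-rolling example might be sketched like this. The seed value is arbitrary and is only there so the run is reproducible.

```r
# Arbitrary seed, used only to make the simulation reproducible
set.seed(42)

# Evaluate the expression 100 times; each evaluation rolls one die
rolls <- replicate(100, sample(1:6, 1))

# One result per repetition
n_rolls <- length(rolls)

# Frequency of each face across the 100 rolls
print(table(rolls))
```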

How to check for duplicates in R?

The best way to check for duplicates in R is to use the duplicated() function. If you want to see which specific rows are copies, you apply it directly to your data frame. For a quick check to see if any duplicates exist at all, the anyDuplicated() function is faster because it stops as soon as it finds the first one. You can also use table() on a column to see a count of each value, which can help you spot duplicates manually.

What is the difference between duplicated() and unique() in R?

The main difference between duplicated() and unique() is what they give you back. The duplicated() function gives you a TRUE/FALSE report for every single item in your original data, telling you which ones are repeats. In contrast, the unique() function throws away all the duplicates and gives you a new, shorter list containing only one copy of each value.

How do I find duplicate rows in an R data.table?

When you're using the high-performance data.table package, finding duplicate rows is very similar to base R but much faster. You can use the duplicated() method specifically designed for data.table objects. You can check the entire table for identical rows or specify a few key columns to define what you consider a duplicate. For removing them, the unique() function for data.table is also highly optimized.

What is the repetition function in R?

The main repetition function in R is rep(). This function is used to repeat values or a sequence of values. You can use it to do simple things like creating a vector of ten 5s, or more complex tasks like repeating a whole sequence multiple times. It's very flexible for creating patterns or long vectors from a shorter set of elements.
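A few short calls illustrate the patterns described here, using the `times` and `each` arguments of rep().

```r
# Ten copies of a single value
tens <- rep(5, times = 10)

# Repeat the whole sequence twice
whole_seq <- rep(c("a", "b"), times = 2)   # "a" "b" "a" "b"

# Repeat each element twice before moving on
each_elem <- rep(c("a", "b"), each = 2)    # "a" "a" "b" "b"

print(whole_seq)
print(each_elem)
```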

How do you replicate a data frame in R?

To "replicate" or copy a data frame in R, the simplest way is to just assign it to a new variable, like new_df <- old_df. This creates an independent copy. If you want to create many copies in a list, perhaps for a simulation where you modify each one slightly, you can use the replicate() function. For example, replicate(5, old_df, simplify = FALSE) would give you a list containing five identical copies of your original data frame.

What does distinct() do in R?

The distinct() function is part of the popular dplyr package and is used to remove duplicate rows from a data frame. It works like unique() but is often considered easier to read and use within a "Tidyverse" workflow. You can use it to find unique rows based on all columns or specify certain columns to look at when identifying duplicates. It's a modern and very readable way to clean your data.
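The three common ways of calling distinct() differ in which columns come back. The sketch below assumes dplyr is installed and uses a hypothetical `policies` data frame.

```r
# Assumes dplyr is installed: install.packages("dplyr")
library(dplyr)

# Hypothetical records; customer 2 has two "Home" rows with different premiums
policies <- data.frame(
  CustomerID = c(1, 2, 2, 3),
  PolicyType = c("Auto", "Home", "Home", "Life"),
  Premium    = c(500, 300, 320, 700),
  stringsAsFactors = FALSE
)

# All columns considered: the premiums differ, so all four rows stay
all_cols <- distinct(policies)

# Only the named columns are considered, and only they are returned
keys_only <- distinct(policies, CustomerID, PolicyType)

# Named columns define duplicates, but every column of the first match is kept
kept_all <- distinct(policies, CustomerID, PolicyType, .keep_all = TRUE)
print(kept_all)
```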

How to filter out duplicates in R?

The most common way to filter out duplicates is to combine the ! (NOT) operator with the duplicated() function. The code df[!duplicated(df), ] reads as "select all rows from the data frame df that are not duplicates." This keeps the first occurrence of every unique row and discards the rest. An alternative is to use the unique(df) function, which achieves the same result, or dplyr::distinct(df) if you are using the Tidyverse.

What does the transpose() function do in R?

Base R has no function named transpose(); transposing is done with t(), which flips a matrix or data frame. It turns the rows into columns and the columns into rows. For example, if you have a table with 5 rows and 3 columns, using t() on it will result in a new table with 3 rows and 5 columns. Note that t() returns a matrix even when you give it a data frame. It's useful for reshaping your data for certain types of analysis or plotting.
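A short sketch shows the dimension swap and one detail worth knowing when the input is a data frame. The objects `m` and `df_small` are hypothetical.

```r
# A 2 x 3 matrix becomes 3 x 2
m <- matrix(1:6, nrow = 2)
flipped_dim <- dim(t(m))          # 3 2

# Transposing a data frame yields a matrix; mixed column types
# are coerced to a single type (character here)
df_small <- data.frame(x = 1:2, y = c("a", "b"), stringsAsFactors = FALSE)
flipped_df <- t(df_small)

print(is.matrix(flipped_df))
print(is.character(flipped_df))
```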

What does the ifelse() function do in R?

The ifelse() function performs a simple test on every element in a vector and returns a value depending on the outcome. You give it a test, a value to return if the test is TRUE, and a value to return if the test is FALSE. For example, you could use it on a vector of ages to create a new category, assigning "Adult" to anyone over 18 and "Minor" to everyone else. It's a fast way to create a new variable based on a condition.
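The age example could be written as follows. The `ages` vector is hypothetical; it includes a boundary value and a missing value to show how each is handled.

```r
# Hypothetical ages, including the boundary value 18 and a missing value
ages <- c(12, 18, 25, NA)

# "over 18" means strictly greater than 18, so 18 itself is "Minor";
# a missing age stays missing in the result
age_group <- ifelse(ages > 18, "Adult", "Minor")
print(age_group)
# "Minor" "Minor" "Adult" NA
```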
